The Wholesale Sedation of America’s Youth


By Andrew M. Weiss

Skeptical Inquiry

Wednesday, May 6, 2009

In 1950, approximately 7,500 children in the United States were diagnosed with mental disorders. That number is at least eight million today, and most receive some form of medication.

Is this progress or child abuse?

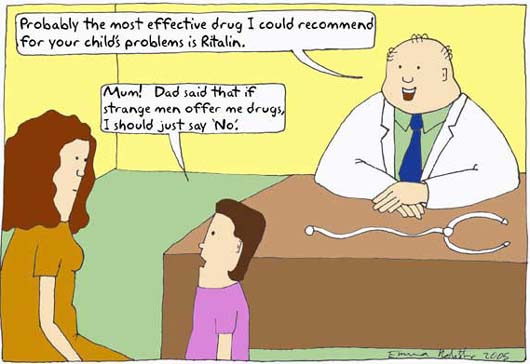

In the winter of 2000, the Journal of the American Medical Association published the results of a study indicating that 200,000 two- to four-year-olds had been prescribed Ritalin for an "attention disorder" from 1991 to 1995. Judging by the response, the image of hundreds of thousands of mothers grinding up stimulants to put into their preschoolers' sippy cups was not a pretty one. Most national magazines and newspapers covered the story; some even expressed dismay or outrage at this exacerbation of what already seemed like a juggernaut of hyper-medicalized childhood. The public reaction, however, was tame, and the medical community, after a moment's pause, continued unfazed. Today, the total toddler count is well past one million, and influential psychiatrists have insisted that mental health prescriptions are appropriate for children as young as twelve months. For the pharmaceutical companies, this is progress.


In 1995, 2,357,833 children were diagnosed with ADHD (Woodwell 1997), twice the number diagnosed in 1990. By 1999, 3.4 percent of all American children had received a stimulant prescription for an attention disorder. Today, that figure is closer to ten percent. Stimulants aren't the only drugs being handed out like candy to our children. A variety of other psychotropics, including antidepressants, antipsychotics, and sedatives, are finding their way into babies' medicine cabinets in large numbers. In fact, the worldwide market for these drugs is growing at a rate of ten percent a year, with antipsychotics alone accounting for $20.7 billion in sales in 2007 (IMSHealth 2008).

While the sheer volume of psychotropics being prescribed for children might, in and of itself, produce alarm, there has been no substantial backlash against their use, in large part because of the widespread perception that "medically authorized" drugs must be safe. Yet there is considerable evidence that psychoactive drugs carry risks every bit as grave as those of other controlled pharmaceuticals. All classes of psychoactive drugs are associated with patient deaths, and each produces serious side effects, some of which are life-threatening.

In 2004, researchers analyzed data from 250,000 patients in the Netherlands and concluded that "we can be reasonably sure that antipsychotics are associated in something like a threefold increase in sudden cardiac death, and perhaps that older antipsychotics may be worse" (Straus et al. 2004). In 2007, the FDA chose to strengthen its black box warning (reserved for substances that represent the most serious danger to the public) on antidepressants, concluding that "the trend across age groups toward an association between antidepressants and suicidality . . . was convincing, particularly when superimposed on earlier analyses of data on adolescents from randomized, controlled trials" (Friedman and Leon 2007). Antidepressants have been banned for use with children in the UK since 2003. According to a confidential FDA report, prolonged administration of amphetamines (the standard treatment for ADD and ADHD) "may lead to drug dependence and must be avoided." The same report warned that "misuse of amphetamine may cause sudden death and serious cardiovascular adverse events" (Food and Drug Administration 2005). The risk of fatal toxicity from lithium carbonate, a not uncommon treatment for bipolar disorder, has been well documented since the 1950s. Incidents of fatal seizures from sedative-hypnotics, especially when mixed with alcohol, have been recorded since the 1920s.


Psychotropics carry nonfatal risks as well. Physical dependence and severe withdrawal symptoms are associated with virtually all psychoactive drugs. Psychological addiction is axiomatic. Concomitant side effects range from unpleasant to devastating, including insulin resistance, narcolepsy, tardive dyskinesia (a movement disorder, affecting 15–20 percent of antipsychotic patients, in which there are uncontrolled facial movements and sometimes jerking or twisting movements of other body parts), agranulocytosis (a life-threatening reduction in white blood cells), increased appetite, vomiting, allergic reactions, uncontrolled blinking, slurred speech, diabetes, balance irregularities, irregular heartbeat, chest pain, sleep disorders, fever, and severe headaches. The attempt to control these side effects has resulted in many children taking as many as eight additional drugs every day, but in many cases this has only compounded the problem. Each "helper" drug produces unwanted side effects of its own.

The child drug market has also spawned a vigorous black market in high schools and colleges, particularly for stimulants. Students have learned to fake the symptoms of ADD in order to obtain amphetamine prescriptions that are subsequently sold to fellow students. Such "shopping" for prescription drugs has even spawned a new term: it is commonly called "pharming." A 2005 report from the Partnership for a Drug-Free America, based on a survey of more than 7,300 teenagers, found that one in ten teenagers, or 2.3 million young people, had tried prescription stimulants without a doctor's order, and 29 percent of those surveyed said they had close friends who had abused prescription stimulants.

In a larger sense, the whole undertaking has had the disturbing effect of making drug use an accepted part of childhood. Few cultures, anywhere on earth or at any time in the past, have been so willing to provide stimulants and sedative-hypnotics to their offspring, especially at such tender ages. An entire generation of young people has been brought up to believe that drug-seeking behavior is both rational and respectable and that most psychological problems have a pharmacological solution. With the ubiquity of psychotropics, children now have the means, opportunity, example, and encouragement to develop a lifelong habit of self-medicating.

Common population estimates put at least eight million children, ages two to eighteen, on prescriptions for ADD, ADHD, bipolar disorder, autism, simple depression, schizophrenia, and the dozens of other disorders now included in psychiatric classification manuals. Yet sixty years ago, it was virtually impossible for a child to be considered mentally ill. The first diagnostic manual published by American psychiatrists, DSM-I in 1952, included among its 106 diagnoses only one for a child: Adjustment Reaction of Childhood/Adolescence. The other 105 diagnoses were specifically for adults. The number of children actually diagnosed with a mental disorder in the early 1950s would hardly move today's needle. There were, at most, 7,500 children in various settings who were believed to be mentally ill at that time, and most of these had explicit neurological symptoms.

Of course, if there really are one thousand times as many kids with authentic mental disorders now as there were fifty years ago, then the explosion in drug prescriptions since then indicates only an appropriate medical response to a newly recognized pandemic. But there are other possible explanations for this meteoric rise. The last fifty years have seen significant social changes, many with a profound effect on children. Burgeoning birth rates, the decline of the extended family, widespread divorce, changing sexual and social mores, households with two working parents: it is fair to say that the whole fabric of life took on new dimensions in the last half century. The legal drug culture, too, became an omnipresent adjunct to daily existence. Stimulants, analgesics, sedatives, decongestants, penicillins, statins, diuretics, antibiotics, and a host of others soon found their way into every bathroom cabinet, while children became frequent visitors to the family physician for drugs and vaccines that we now believe are vital to our health and happiness. There is also the looming motive of money. The New York Times reported in 2005 that physicians who had received substantial payments from pharmaceutical companies were five times more likely to prescribe a drug regimen to a child than those who had refused such payments.


So other factors may well have contributed to the upsurge in psychiatric diagnoses over the past fifty years. But even if the increase reflects an authentic epidemic of mental health problems in our children, it is not certain that medication has ever been the right way to handle it. The medical "disease" model is one approach to understanding these behaviors, but there are others, including a hastily discarded psychodynamic model that had a good record of effective symptom relief. Alternative, less invasive treatments, such as nutritional treatments, early intervention, and teacher and parent training programs, have also been found to be at least as effective as medication in the long-term reduction of a variety of ADHD symptoms (MTA Cooperative Group 1999).

Nevertheless, the medical-pharmaceutical alliance has largely shrugged off other approaches, scoffed at the potential for conflicts of interest, and continued to medicate children in ever-increasing numbers. With the proportion of diagnosed kids growing every month, it may be time to take another look at the practice and soberly reflect on whether we want to continue down this path. In that spirit, it is not unreasonable to ask whether this exponential expansion in medicating children has another explanation altogether. What if children are the same as they always were? After all, virtually every symptom now thought of as diagnostic was once an aspect of temperament or character. We may not have liked it when a child was sluggish, hyperactive, moody, fragile, or pestering, but we didn't ask his parents to medicate him with powerful chemicals either. What if there is no such thing as mental illness in children (except the small, chronic, often neurological minority we once recognized)? What if it is only our perception of childhood that has changed? To answer this, we must look at our history and at our nature.

The human inclination to use psychoactive substances predates civilization. Alcohol has been found in late Stone Age jugs; beer may have been fermented before the invention of bread. Nicotine metabolites have been found in ancient human remains and in pipes in the Near East and Africa. Knowledge of Hul Gil, the “joy plant,” was passed from the Sumerians, in the fifth millennium b.c.e., to the Assyrians, then in serial order to the Babylonians, Egyptians, Greeks, Persians, Indians, then to the Portuguese who would introduce it to the Chinese, who grew it and traded it back to the Europeans. Hul Gil was the Sumerian name for the opium poppy. Before the Middle Ages, economies were established around opium, and wars were fought to protect avenues of supply.

With the modern science of chemistry in the nineteenth century, new synthetic substances were developed that shared many of the desirable qualities of the more traditional sedatives and stimulants. The first modern drugs were barbiturates, a class of some 2,500 sedative-hypnotics first synthesized in 1864. Barbiturates became very popular in the U.S. for depression and insomnia, especially after the temperance movement produced draconian anti-drug legislation (most notoriously Prohibition) just after World War I. But variety was limited, and fears of death by convulsion and the Winthrop drug scare kept barbiturates from more general distribution.

Stimulants, typically caffeine and nicotine, were already ubiquitous in the first half of the twentieth century, but more potent varieties would have to wait until amphetamines came into widespread use in the 1930s. Amphetamines first became known in the 1920s and 1930s, when they were used to treat asthma, hay fever, and the common cold. In 1932, the Benzedrine Inhaler was introduced to the market and was a huge over-the-counter success. With the introduction of Dexedrine in the form of small, cheap pills, amphetamines were prescribed for depression, Parkinson's disease, epilepsy, motion sickness, night-blindness, obesity, narcolepsy, impotence, apathy, and, of course, hyperactivity in children.

Amphetamines came into still wider use during World War II, when they were given out freely to GIs for fatigue. When the GIs returned home, they brought their appetite for stimulants to their family physicians. By 1962, Americans were ingesting the equivalent of forty-three ten-milligram doses of amphetamine per person annually (according to FDA manufacturer surveys).

Still, in the 1950s, the family physician's involvement in furnishing psychoactive medications for the treatment of primarily psychological complaints was largely sub rosa. It became far more widespread and notorious in the 1960s, for two reasons. First, a new, safer class of sedative-hypnotics, the benzodiazepines, including Librium and Valium, was an instant sensation, especially among housewives, who called them "mothers' helpers." Second, amphetamines had finally been approved for use with children (their use up to that point had been "off-label," meaning that they were prescribed despite the lack of FDA authorization).

Emboldened by the tremendous success of amphetamines, pharmaceutical companies became more aggressive in marketing their products. Valium was marketed directly to physicians and indirectly through a public relations campaign implying that benzodiazepines offered sedative-hypnotic benefits without the risk of addiction or of death from drug interactions or suicide. Within fifteen years of its introduction, 2.3 billion Valium pills were being sold annually in the U.S. (Sample 2005).

So family physicians became society's instruments: the suppliers of choice for legal mood-altering drugs. But medical practitioners required scientific authority to protect their reputations, and the public required a justification for its drug-seeking behavior. The pharmaceutical companies were quick to offer a pseudoscientific conjecture that satisfied both. They argued that neurotransmitters, only recently identified, were in fact the long-sought mediators of mood and activity. Psychological complaints, consequently, were a function of an imbalance of these neural chemicals that could be corrected with stimulants and sedatives (and later antidepressants and antipsychotics). While the assertion was pure fantasy without a shred of evidence, so little was known about the brain's true actions that the artifice was tamely accepted. This would later prove devastating when children became the targets of pharmaceutical expansion.

With Ritalin's FDA approval for the treatment of hyperactivity in children, the same marketing techniques that had been so successful with other drugs were applied to the new stimulant. Pharmaceutical companies had a vested interest in increasing sales, and they spared no expense in convincing physicians to prescribe the drug. Cash payments, stock options, paid junkets, no-work consultancies, and other inducements encouraged physicians to relax their natural caution about medicating children. Parents were also targeted. For example, CIBA, the maker of Ritalin, made large direct payments to parents' support groups like CHADD (Children and Adults with Attention Deficit/Hyperactivity Disorder) (The Merrow Report 1995). To increase the acceptance of stimulants, drug companies paid researchers to publish favorable articles on the effectiveness of stimulant treatments. They also endowed chairs and paid for the establishment of clinics in influential medical schools, particularly ones associated with universities of international reputation. By the mid-1970s, more than half a million children had already been medicated, primarily for hyperactivity.


The brand of psychiatry that became increasingly popular in the 1980s and 1990s did not have its roots in notions of normal behavior or personality theory; it grew out of the concrete, atheoretical treatment style used in clinics and institutions for the profoundly disturbed. The German psychiatrist Emil Kraepelin, not Freud, was the god of mental hospitals, and pharmaceuticals were the panacea. So the whole underlying notion of psychiatric treatment, diagnosis, and disease changed. Psychiatry, which had straddled psychology and medicine for a hundred years, abruptly abandoned psychology for a comfortable sinecure within its traditional parent discipline. The change was profound.

People seeking treatment were no longer clients; they were patients. Their complaints were no longer suggestive of a complex mental organization; they were symptoms of a disease. Patients were not active participants in a collaborative treatment; they were passive recipients of symptom-reducing substances. Mental disturbances were no longer caused by unique combinations of personality, character, disposition, and upbringing; they were attributed to pre-birth anomalies that caused vague chemical imbalances. Cures were no longer anticipated or sought; mental disorders were inherited illnesses, like birth defects, that could not be cured except by some future magic genetic bullet. All that could be done was to treat symptoms chemically, and this was being done with astonishing ease and regularity.

In many ways, children are the ideal patients for drugs. By nature, they are often passive and compliant when told by a parent to take a pill. Children are also generally optimistic and less likely than adults to balk at treatment. Even if they are inclined to complain, the parent is a ready intermediary between the physician and the patient. Parents are willing to participate in enforcing treatments once they have justified them in their own minds, and, unlike adults, many kids do not have the luxury of discontinuing an unpleasant medication. Children, moreover, do not know how they ought to feel. They adjust to the drugs' effects as if those effects were natural, and they are more tolerant of side effects than adults. Pharmaceutical companies recognized these assets and soon were targeting new drugs specifically at children.

But third-party insurance providers balked at the surge in costs for treatment of previously unknown psychological syndromes, especially since unwanted drug effects were making some cases complicated and expensive. Medicine's growing prosperity as the purveyor of treatments for mental disorders was threatened, and the industry's response was predictable. Psychiatry found that it could meet insurance company requirements by simplifying diagnoses, reducing identification to the mere appearance of certain symptoms. By 1980, it had published an entirely new set of standards.


Lost in the process was the fact that the redefined diagnoses (and a host of new additions) failed to meet minimal standards of falsifiability and differentiability. This meant that the diagnoses could never be disproved and that they could not be reliably distinguished from one another. The new disorders were also defined as lists of symptoms from which a physician could check off a certain number of hits like a Chinese menu, which led to reification, an egregious scientific impropriety. Insurers, however, their arguments undermined and under pressure from parents and physicians, eventually withdrew their objections. From that moment on, the treatment of children with powerful psychotropic medications grew unchecked.

As new psychotropics became available, their uses were quickly extended to children despite, in many cases, indications that the drugs were intended for use with adults only. New antipsychotics, the atypicals, were synthesized and marketed beginning in the 1970s. Subsequently, a new class of antidepressants, the selective serotonin reuptake inhibitors (SSRIs) such as Prozac and Zoloft, was introduced. These drugs were added to the catalogue of childhood drug treatments with astonishing casualness even as stimulant treatment for hyperactivity continued to burgeon.

In 1980, hyperactivity, which had been imprudently named "minimal brain dysfunction" in the 1960s, was renamed Attention Deficit Disorder in order to be more politic, but the move had an unintended consequence. Parents and teachers, familiar with the name but not always with the symptoms, frequently misidentified children who were shy, slow, or sad (introverted rather than inattentive) as suffering from ADD. Rather than correct the mistake, though, some enterprising physicians responded by prescribing the same drug for the opposite symptoms. This was justified on the grounds that stimulants, which were being offered because they slowed down hyperactive children, might very well have the predictable effect of speeding up underactive kids. In this way, a whole new population of children became eligible for medication. Later, the authors of DSM-III-R memorialized this practice by renaming ADD again, this time as ADHD, and redefining ADD as inattention. Psychiatry had reached a new level: it was now willing to invent an illness to justify a treatment. It would not be the last time this was done.

In the last twenty years, a new, more disturbing trend has become popular: the rebranding of legacy forms of mental disturbance as broad categories of childhood illness. Manic depressive illness and infantile autism, two previously rare disorders, were redefined through this process as "spectrum" illnesses with loosened criteria and symptom lists that cover a wide range of previously normal behavior. With this slim justification in place, more than a million children have been treated with psychotropics for bipolar disorder and another 200,000 for autism. A recent article in this magazine, "The Bipolar Bamboozle" (Flora and Bobby 2008), illuminates how and why an illness that once occurred in two of every 100,000 Americans has been recast as an epidemic affecting millions.

To overwhelmed parents, drugs solve a whole host of ancillary problems. The relatively low cost (at least in out-of-pocket dollars) and the small commitment of time for drug treatments make them attractive to parents who are already stretched thin by work and home life. Those whose confidence is shaken by indications that their children are “out of control” or “unruly” or “disturbed” are soothed by the seeming inevitability of an inherited disease that is shared by so many others. Rather than blaming themselves for being poor home managers, guardians with insufficient skills, or neglectful caretakers, parents can find comfort in the thought that their child, through no fault of theirs, has succumbed to a modern and widely accepted scourge. A psychiatric diagnosis also works well as an authoritative response to demands made by teachers and school administrators to address their child’s “problems.”

Once a medical illness has been identified, all unwanted behavior becomes fruit of the same tree. Even the children themselves are often at first relieved that their asocial or antisocial impulses reflect an underlying disease and not some flaw in their characters or personalities.

Conclusions

In the last analysis, childhood has been thoroughly and effectively redefined. Character and temperament have been largely removed from the vocabulary of human personality. Virtually every undesirable impulse of children has taken on pathological proportions and diagnostic significance. Yet if the psychiatric community is wrong in its theories and hypotheses, then a generation of parents has been deluded while millions of children have been sentenced to a lifetime of ingesting powerful and dangerous drugs.

Considering the enormous benefits reaped by the medical community, it is no surprise that critics have argued that the whole enterprise is a cynical, reckless artifice crafted to enrich that community unfairly. Even though this is undoubtedly not true, physicians and pharmaceutical companies must answer for the rush to medicate our most vulnerable citizens on the basis of little evidence, a weak theoretical model, and an antiquated and repudiated philosophy. For its part, the scientific community must answer for its timidity in challenging treatments prescribed in the absence of clinical observation and justified by research of insufficient rigor, performed by professionals and institutions whose objectivity is clearly in question because their own interests are materially entwined in their findings.

It should hardly be necessary to remind physicians that even if their diagnoses are real, they are still bound by Galen's dictum Primum non nocere, or "first, do no harm." If with no other population, this ought to be our standard when dealing with children. Yet we have chosen the most invasive, destructive, and potentially lethal treatment imaginable while rejecting other options that show great promise of being at least as effective and far safer. But these other methods are more expensive, more complicated, and more time-consuming, and thus far we have not proved willing to bear the cost. Instead, we have jumped at a discounted treatment, a soft-drink-machine cure: easy, cheap, fast, and putatively scientific. Sadly, the difference in price is now being paid by eight million children.

Mental illness is a fact of life, and it is naïve to imagine that there are not seriously disturbed children in every neighborhood and school. What is more, in the straitened economy of child rearing and education, medication may be the most efficient and cost-effective treatment for some of these children. Nevertheless, to medicate not just the neediest, most complicated cases but one child in every ten, despite the availability of less destructive treatments and regardless of doubtful science, is a tragedy of epic proportions.

What we all have to fear, at long last, is not having been wrong but having done wrong. That will be judged in a court of a different sort. Instead of showing humility, we continue to feed drugs to our children with blithe indifference. Even when a child's mind is truly disturbed (and our standards need to be revised drastically on this score), a treatment model that intends to chemically palliate and manage ought to be our last resort, not our first option. How many more children need to be sacrificed for us to see the harm in expediency, greed, and plain ignorance?

Andrew Weiss holds a PhD in school-clinical psychology from Hofstra University. He served on the faculty of Iona College and has been a senior school administrator in Chappaqua, New York. He has published a number of articles on technology in education. E-mail: anweiss [at] optonline.net.

References

- Flora, S.R., and S.E. Bobby. 2008. The Bipolar Bamboozle. Skeptical Inquirer 32(5): 41–45 (September/October).

- Food and Drug Administration. 2005. FDA Confidential NDA 11-522. Available online at www.fda.gov/MEDwatch/safety/2005/aug_PI/Adderall_PI.pdf.

- Friedman, R.A., and A.C. Leon. 2007. Expanding the black box: Depression, antidepressants, and the risk of suicide. New England Journal of Medicine 356(23): 2343–2346.

- IMSHealth. 2007. Top 10 Global Therapeutic Classes 2007. Available online at http://imshealth.com/vgn/images/portal/CIT_40000873/56/43/83743772Top%2010%20Global%20Therapeutic%20Classes%202007.pdf.

- The Merrow Report. 1995. A.D.D.—A Dubious Diagnosis? PBS.

- The MTA Cooperative Group. 1999. A 14-month randomized clinical trial of treatment strategies for attention-deficit/hyperactivity disorder. Archives of General Psychiatry 56: 1073–1086.

- Sample, Ian. 2005. Leo Sternbach (obituary). The Guardian (Guardian Unlimited), October 3.

- Straus, S.M., et al. 2004. Antipsychotics and the risk of sudden cardiac death. Archives of Internal Medicine 164(12): 1293–1297.

- Woodwell, D.A. 1997. 1995 Summary. National Hospital Ambulatory Medical Care Survey 286 (May 8): 1–25.
